Showing posts with label cloud. Show all posts

Tuesday, November 3, 2015

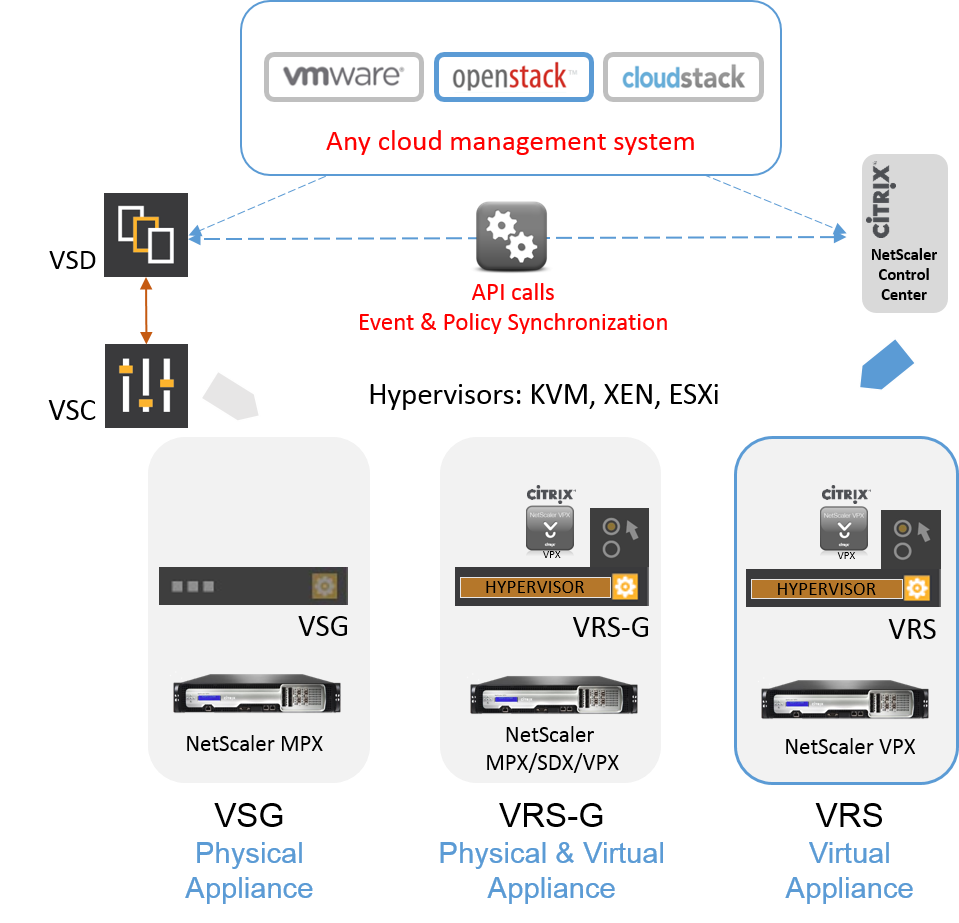

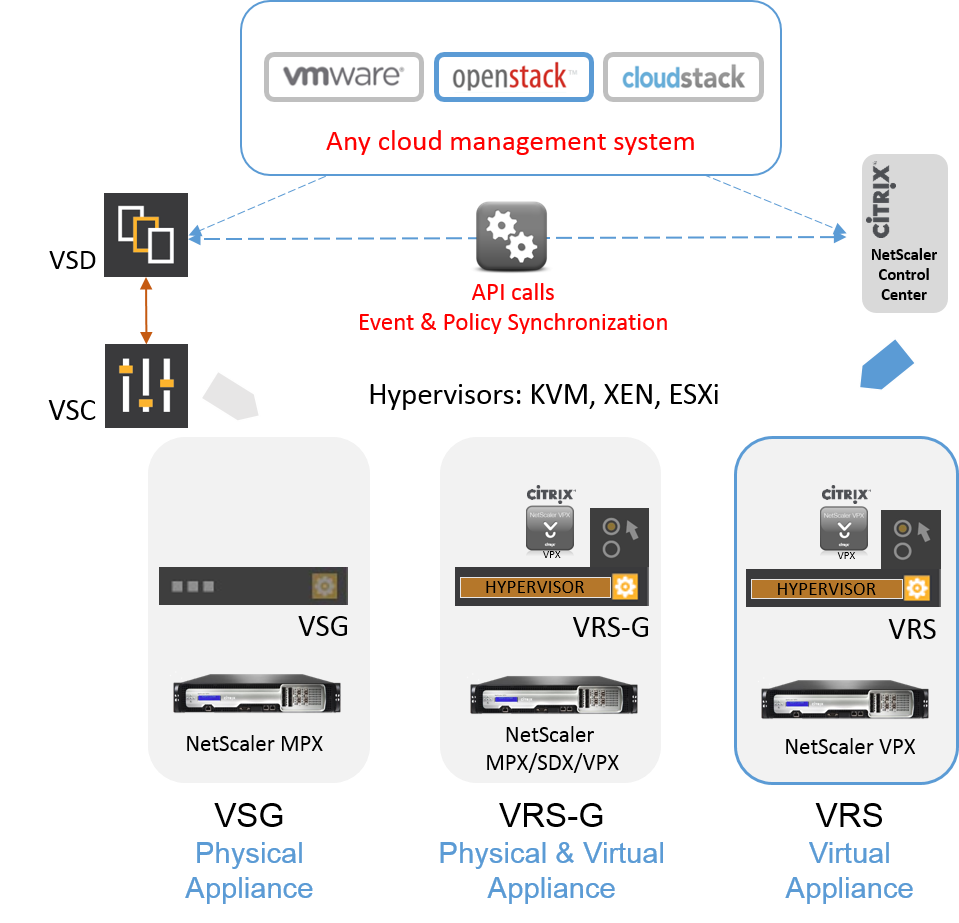

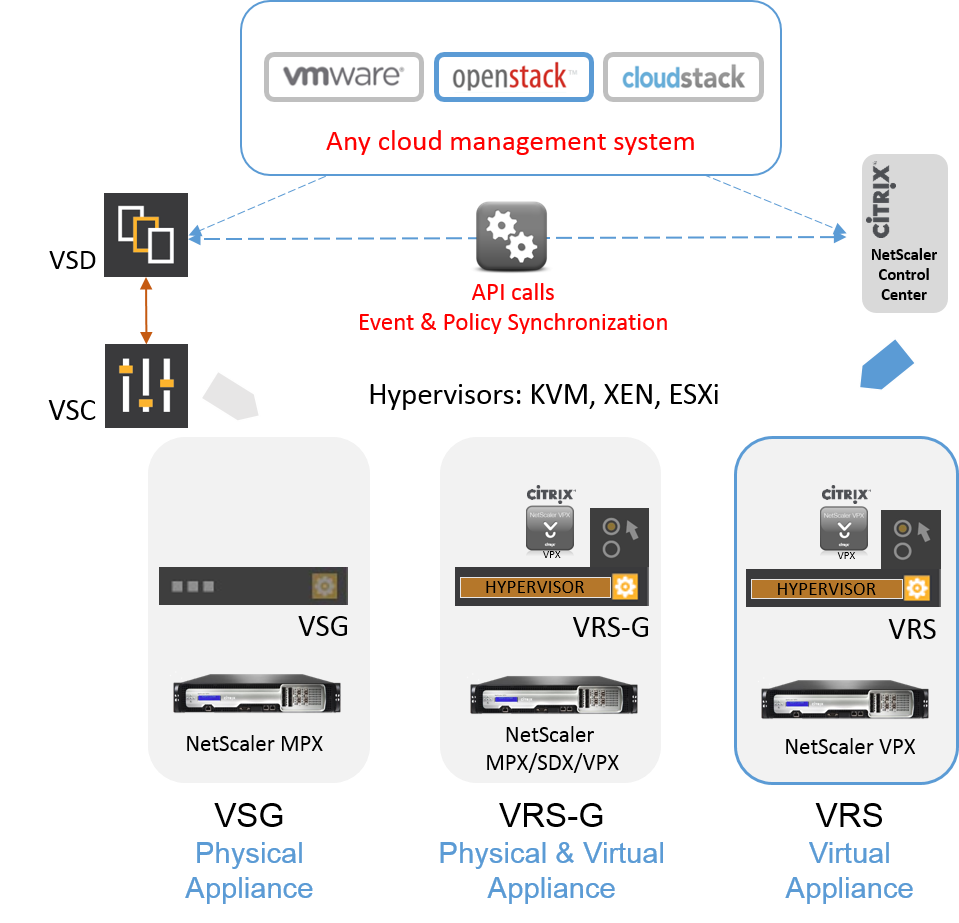

Nuage Networks and Citrix Collaborate for Application Delivery with SDN and OpenStack

The application landscape is changing. Enterprise organizations are deploying complex scale-out applications.

These applications have many components that have to work together. An application architecture can include a legacy component that resides on a mainframe, service-oriented architecture components, and new microservices that perform specialized tasks.

The network infrastructure is changing in response.

Most applications run on a virtual server infrastructure. Network services are being migrated to virtualized network infrastructure. Network services now exist as virtual appliances. Everything needs to interact, from the application components to the virtual network services.

To connect the components, virtual networks such as the Nuage Networks Virtualized Services Platform (VSP) have been developed, and organizations are using them to tie together the pieces of virtualized applications and the virtualized network services.

Thursday, May 28, 2015

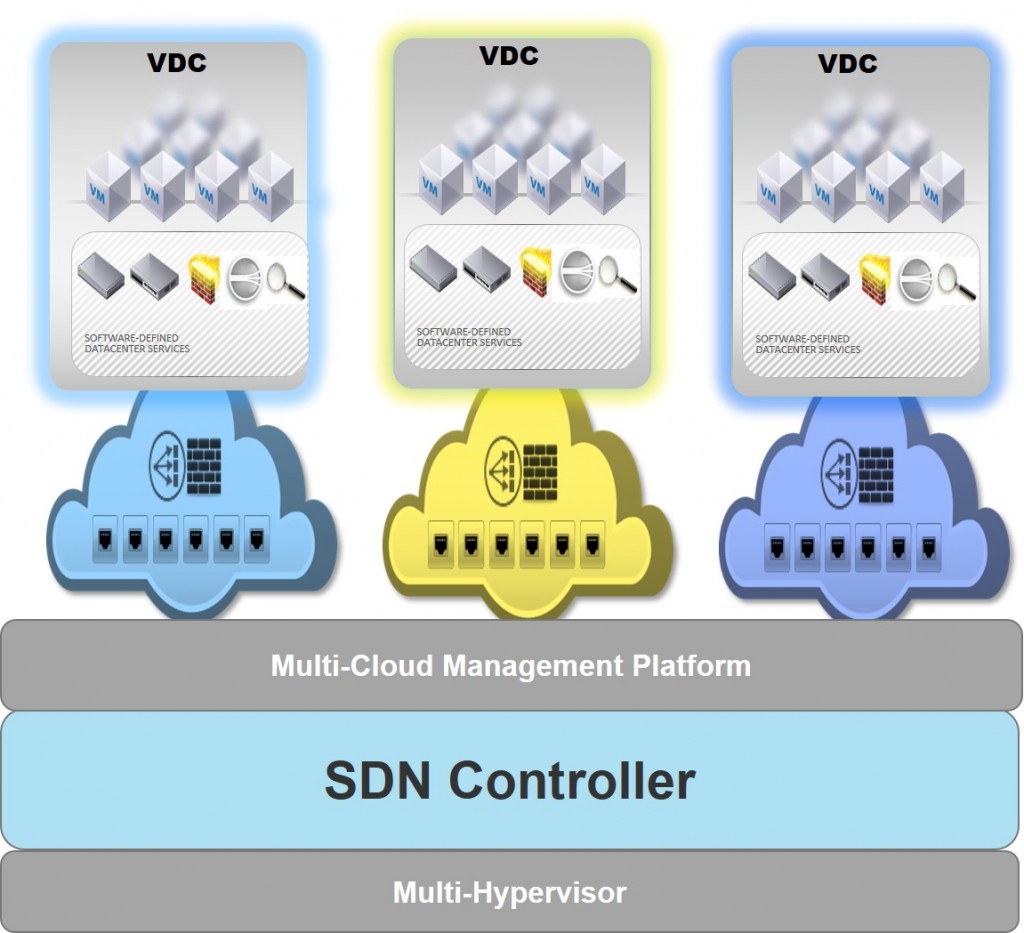

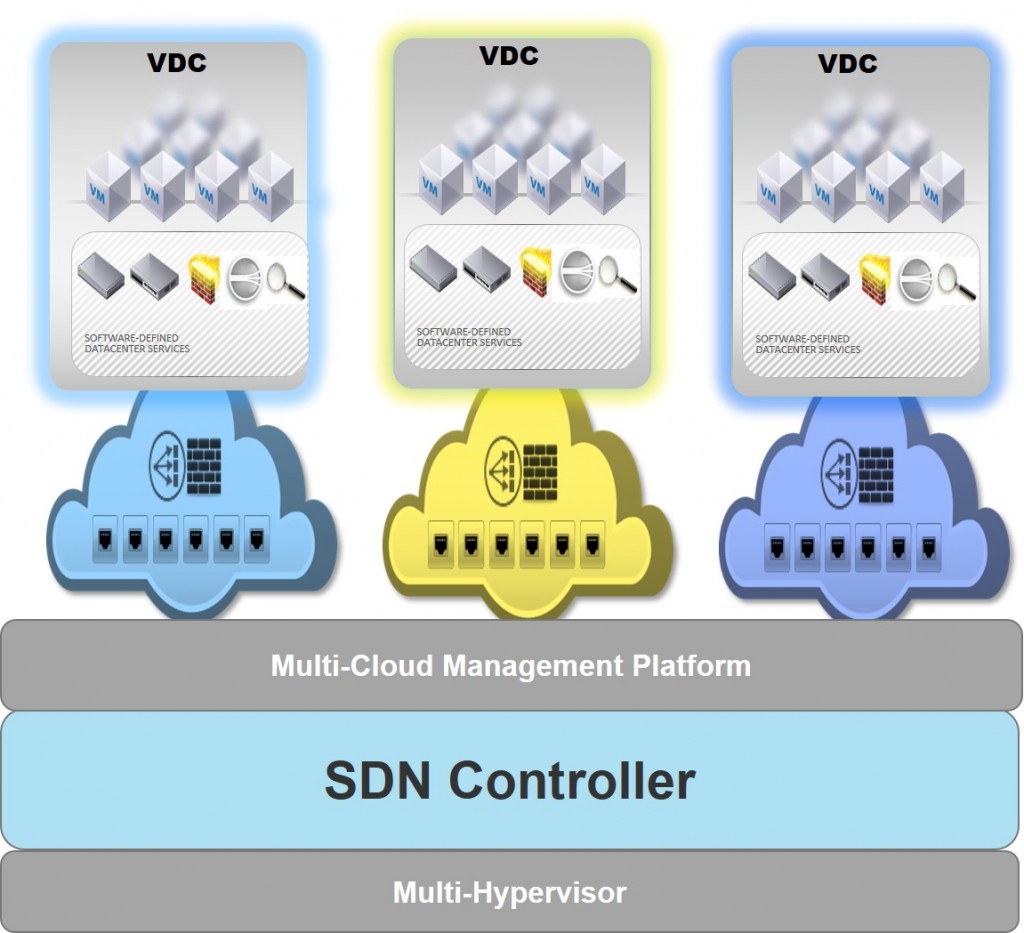

Leveraging Multi-tenancy in the ADC as a Way to the Cloud

Over the last few years, organizations have increasingly been shifting their data centers to a cloud-based model. This transition has been built upon virtualization, automation and orchestration of IT resources, mainly server, storage and switching infrastructure. The goal is to increase agility and reduce the costs of deploying and managing resources to support business applications.

As the transition to cloud-based data centers marches on, it is becoming apparent that organizations need to keep going after they virtualize their server, storage and switching infrastructures. To maximize device consolidation and increase flexibility and agility in deploying resources, other components instrumental to the security, performance and availability of the organization’s computing services need to take part in the transformation.

While increasing agility and reducing costs are worthwhile goals, there are additional concerns when it comes to supporting applications. In its report, “Cloud Service Strategies: North American Enterprise Survey, January 15, 2014,” Infonetics Research found that 79 percent of respondents want to improve application performance, 78 percent want to respond more quickly to business needs, 77 percent want to speed up application deployment and increase scalability, and 73 percent expect to reduce costs with cloud services.

Wednesday, May 27, 2015

Citrix NetScaler VPX on Microsoft Azure Accelerates Your Applications in the Cloud

Cloud computing offers a fundamental change in the way organizations deploy and access their business applications. Cloud computing is about leveraging resources over the network to enable an organization to work more efficiently and with greater agility. Getting the best results from applications in the cloud requires an application delivery controller (ADC) to provide an always-on, always-secure foundation to ensure performance. The ADC solution for the cloud needs to balance workloads and manage user traffic while providing granular visibility into application performance and control for reliable application delivery. To meet these needs, Citrix and Microsoft have collaborated to make the Citrix NetScaler ADC available on Microsoft Azure.

Citrix NetScaler VPX for Microsoft Azure

Citrix NetScaler VPX is a virtual appliance for L4-7 networking services that gives organizations secure, high-performance access to applications deployed in the cloud. VPX supports widely deployed applications such as Citrix XenApp® and Citrix XenDesktop®, as well as specific workloads including Microsoft Exchange, SQL, and SharePoint. VPX provides a networking foundation that scales with the changing needs of a cloud environment without the physical limitations of a hardware appliance. Performance enhancements such as compression, load balancing, and SSL acceleration deliver a superior user experience on Microsoft applications and workloads. VPX provides a set of capabilities to ensure availability and to keep applications connected and protected. Advanced security capabilities protect against attacks that can disable your applications or put your data in danger. VPX allows organizations to securely connect to their environments from anywhere, on any device. VPX puts you in control of applications with the visibility required to keep them performing.

NetScaler routes traffic in and out of the Azure Virtual Network with two or more subnets within each virtual network. Customers can leverage Network Security Groups to control network traffic by providing a filter for each VM within a subnet. Each virtual network has a single NetScaler VPX deployed across it.
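
To make the filtering idea concrete, here is a minimal sketch of how a Network Security Group style filter decides whether traffic reaches a VM. Azure evaluates NSG rules in priority order, lowest number first, and stops at the first match. The `Rule` fields, the sample rules, and the deny-when-nothing-matches fallback are simplifying assumptions for illustration, not the Azure API.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified inbound rule (not the Azure object model)."""
    priority: int  # lower number is evaluated first
    source: str    # source address prefix in CIDR notation
    port: int      # destination port
    allow: bool    # True = allow, False = deny


def evaluate(rules, src_ip, port):
    """Return True if traffic is allowed; the first match in priority order wins."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if ip_address(src_ip) in ip_network(rule.source) and rule.port == port:
            return rule.allow
    return False  # assumption: deny when no rule matches


# Illustrative rule set: HTTPS from anywhere, SSH only from the 10.0.0.0/8 range.
RULES = [
    Rule(100, "0.0.0.0/0", 443, True),
    Rule(200, "10.0.0.0/8", 22, True),
    Rule(300, "0.0.0.0/0", 22, False),
]
```

With these sample rules, `evaluate(RULES, "10.1.2.3", 22)` is allowed by the priority 200 rule before the broader deny at priority 300 is ever consulted.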

Friday, May 15, 2015

Using Application Delivery Services to Build Scalable OpenStack Clouds

As your organization seeks to increase IT agility and reduce operating costs, building an orchestration platform like OpenStack to automate the deployment of resources makes a lot of sense. As you plan the implementation of your OpenStack platform, ensuring application availability and performance is a necessary design goal. There are a number of things to consider to this end: for example, how to minimize downtime, and how to support both your legacy applications and those built for the cloud. You might need to host multiple tenants on your cloud platform and deliver performance SLAs to them. Larger application deployments might require extending cloud platform services to multiple locations.

To ensure a successful implementation of OpenStack you need design recommendations around best practices for multi-zone and multi-region cloud architectures. There are two major areas to look at. One is resource segregation or ‘pooling’ and the use of cloud platform constructs such as availability zones and host aggregates to group infrastructure into fault domains and high-availability domains. The other is how to use an ADC to provide highly available, highly performant, application delivery and load balancing services in your distributed, multi-tenant, fault-tolerant cloud architecture.

Best Practices for Multi-Zone and Multi-Region Cloud Integration

It’s easier to build resilient and scalable OpenStack data centers if three best-practice rules are applied in planning:

• Segregate physical resources to create fault domains, and plan to mark these as OpenStack Availability Zones.

• Distribute OpenStack and database controllers across three or more adjacent fault domains to create a resilient cluster.

• Design both data plane and control plane networking for scale-out and high availability.
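
The second rule can be sketched in a few lines. The example below spreads controllers round-robin across availability zones and checks whether a majority survives the loss of any single zone. The majority-quorum test and all names (`place_controllers`, `survives_zone_loss`, the zone labels) are assumptions for illustration; they are not OpenStack APIs.

```python
from collections import Counter
from itertools import cycle


def place_controllers(controllers, zones):
    """Assign controllers to zones round-robin; require at least three zones."""
    if len(zones) < 3:
        raise ValueError("need three or more fault domains")
    return dict(zip(controllers, cycle(zones)))


def survives_zone_loss(placement):
    """True if a strict majority of controllers remains after losing the fullest zone."""
    total = len(placement)
    worst = max(Counter(placement.values()).values())
    return total - worst > total // 2


# Hypothetical controller and zone names.
placement = place_controllers(["ctl-1", "ctl-2", "ctl-3"], ["az-1", "az-2", "az-3"])
```

With three controllers in three zones, losing any one zone leaves two of three controllers, so the cluster keeps its majority. Packing two of the three controllers into one zone would not pass the check.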

Wednesday, July 3, 2013

A Practical Look at SDN Software and Hardware Considerations

The Opportunity for SDN

Software Defined Networking represents the biggest change to the network in many years. What makes SDN interesting is the transformation that it can enable. Businesses are looking for more control over their applications on the network. SDN promises to deliver agility and simplification in the network to support applications. With SDN, the network becomes more efficient and agile, and an enabler for delivering on business goals for application performance. As a buyer, it’s understandably difficult to separate the hype from the reality. I’d like to suggest a few points to consider as you map out your SDN strategy.

Implications for the Network

Network operators still need to design, provision, manage, operate and troubleshoot their network. While SDN offers greater simplicity, operators have to continue current network management functions and, at the same time, become educated on SDN developments. With SDN, new protocols and technologies will emerge. The investments you make in your network infrastructure today need to be flexible enough to see you through the next several years. There will be a hybrid model in the network: a mix of overlay technology and physical networks. The demarcation points will depend on the use cases for those overlays and the ability of the physical network to support them. Network operators will have to understand the relationship between the two and be able to design networks with the right on-ramps and off-ramps. As network management shifts away from the CLI and toward orchestration platforms, the network interfaces and integration points (APIs) need to be clearly defined and deliver automation and agility.

Monday, December 10, 2012

Achieving Single Touch Provisioning of Services Across The Network

Service provider networks are continually growing and evolving in order to provide a rich end user experience. Ethernet-based services are increasing rapidly to deliver high bandwidth and anytime access to video, voice, and broadband applications. In order to meet the needs of their customers, service providers have built complex networks consisting of thousands of high-capacity Ethernet ports on devices in many locations. Typically these devices need to be provisioned, configured, and managed separately, which increases operating costs and the time to deploy new services. The challenge is how to achieve single touch provisioning of services across the network.

Most factors contributing to the complexity of service provider networks revolve around the need to provision and manage separate physical platforms. The majority of the service provider’s OpEx results from the technical support infrastructure required to maintain and deploy services in a distributed network. Case studies show that eliminating the need to manage individual devices separately can reduce the service provider’s OpEx by 70%.

Juniper Networks understands the challenges faced by service providers in today’s environment and has created a system called the Junos® Node Unifier (JNU) that enables centralized management, provisioning, and single touch deployment of services across thousands of Ethernet ports. The JNU solution is Juniper’s way to simplify today’s networks. JNU consists of satellite devices connected to a hub, where the satellites can be configured, provisioned, and managed from the hub device, giving a single unified node experience to network operators.
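
The hub-and-satellite idea can be illustrated with a toy model: one call at the hub configures every port on every attached satellite. This is only a sketch of the single touch concept. The `Hub` and `Satellite` classes, the `provision_vlan` method, and the device names are hypothetical and do not represent Junos or JNU interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class Satellite:
    """Hypothetical access device whose ports are configured only via the hub."""
    name: str
    port_count: int
    port_vlans: dict = field(default_factory=dict)  # port index -> VLAN ID


@dataclass
class Hub:
    """Hypothetical central device that owns the configuration of its satellites."""
    satellites: list = field(default_factory=list)

    def provision_vlan(self, vlan_id):
        """Apply one VLAN to every port on every satellite; return ports touched."""
        touched = 0
        for sat in self.satellites:
            for port in range(sat.port_count):
                sat.port_vlans[port] = vlan_id
                touched += 1
        return touched


hub = Hub([Satellite("sat-a", 48), Satellite("sat-b", 24)])
ports_configured = hub.provision_vlan(100)
```

The operator issues one provisioning action at the hub instead of logging in to each satellite, which is the operational saving the post describes.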

Friday, December 7, 2012

Securing Virtualization in the Cloud-Ready Data Center

With the rapid growth in the adoption of server virtualization, new requirements for securing the data center have emerged. Today’s data center contains a combination of physical servers and virtual servers. With the advent of distributed applications, traffic often travels between virtual servers and might not be seen by physical security devices. This means that security solutions for both environments are needed. As organizations increasingly implement cloud computing, security for the virtualized environment is as integral a component as traditional firewalls have been in physical networks.

Juniper's Integrated Portfolio Delivers a Solution

With a long history of building security products, Juniper Networks understands the security requirements of the new data center, and Juniper’s solutions are designed to address these changing needs. The physical security portfolio includes the Juniper Networks SRX3000 and SRX5000 lines of services gateways and the Juniper Networks STRM Series Security Threat Response Managers. These physical devices work together with the Juniper Networks vGW Virtual Gateway, a software firewall that integrates with VMware vCenter and the VMware ESXi server infrastructure.

Fundamental to virtual data center and cloud security is the control of access to virtual machines (VMs) and the applications running on them, for the specific business purposes sanctioned by the organization. At its foundation, the vGW is a hypervisor-based, VMsafe-certified, stateful virtual firewall that inspects all packets to and from VMs, blocking all unapproved connections. Administrators can enforce stateful virtual firewall policies for individual VMs, logical groups of VMs, or all VMs. Global, group, and single-VM rules make it easy to create “trust zones” with strong control over high-value VMs, while enabling enterprises to take full advantage of many virtualization benefits. vGW integration with the STRM and SRX Series provides a complete solution for mixed physical and virtualized workloads.
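
As a rough illustration of global, group, and single-VM rules combined with default deny, consider the sketch below. It models only the scoping idea and ignores connection state. The `Allow` class, the scope strings, and the sample policy are hypothetical and are not the vGW policy format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Allow:
    """Hypothetical allow rule; anything not explicitly allowed is blocked."""
    scope: str  # "global", "group:<name>", or "vm:<name>"
    port: int   # destination port on the VM


def permitted(rules, vm, groups, port):
    """Return True if any rule whose scope covers this VM allows the port."""
    scopes = {"global", f"vm:{vm}"} | {f"group:{g}" for g in groups}
    return any(r.scope in scopes and r.port == port for r in rules)


# Illustrative policy: HTTPS everywhere, SQL only for the "db" group,
# SSH only for one named VM.
POLICY = [
    Allow("global", 443),
    Allow("group:db", 1433),
    Allow("vm:jump-host", 22),
]
```

Under this sample policy, a VM in the `db` group accepts port 1433 while a web VM does not, which is the "trust zone" effect described above.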

Sunday, October 28, 2012

OpenStack Summit San Diego 2012

The OpenStack Summit in San Diego was the place to be last week. I attended and wanted to share my observations. There was a lot of participation and energy. It was sold out, with over 1300 people attending and about 35 vendors displaying their products. Previous summits were developer forums. This time the format was expanded, and there were hundreds of sessions in many categories, including case studies and industry analysis as well as the usual developer sessions. See http://openstacksummitfall2012.sched.org/ for a list of the sessions.

What Is OpenStack and What Does It Do?

OpenStack (www.openstack.org) is a foundation that manages an open source cloud computing platform. It grew out of a project that NASA and Rackspace started in 2010 to build their cloud infrastructure. Its mission is “To produce the ubiquitous open source cloud computing platform that will meet the needs of public and private cloud providers regardless of size, by being simple to implement and massively scalable.” OpenStack focuses on the core infrastructure for compute, storage, images and networking. There is a large ecosystem of vendors providing tools to do the things that OpenStack does not do.

OpenStack consists of modules to configure cloud computing resources. The components are the Nova Compute Service, Swift Storage Service, Glance Image Service and Quantum Network Service. They automate the functions that are required to set up these services. OpenStack lets organizations quickly provision and reprovision compute resources. Even with virtualization, it can take days to fully set up a virtual server, networking and storage. Organizations want this to happen in minutes, whether they are offering a commercial service or an internal IT service.

Thursday, October 11, 2012

Monetizing the Business Edge with Hosted Private Cloud

The last few years have seen major changes in enterprise networking, with the growth of MPLS virtual private networks (VPNs) and the rise in adoption of cloud services. A growing trend that blends the two is Hosted Cloud Services, delivered over MPLS VPNs from the service provider’s data center to the customer’s branch office locations. These services are facilitated and enhanced by Juniper’s combined routing and switching solutions, as I discussed in my blog post about the Junos Node Unifier.

Network Service Providers’ Opportunity with Cloud Services

NSPs are looking for new areas to boost revenue growth, and cloud computing is one of the key ones. Based on research from STL Partners’ Telco 2.0 report, the Hosted Private Cloud market is expected to grow 34% annually to $6.5 billion by 2014. Since they already own the network, as well as the infrastructure around it including the billing and management systems, NSPs are in a good position to monetize the cloud opportunity. While the cloud services market is highly competitive, there is an opportunity for NSPs to differentiate their service offerings by leveraging their enterprise-grade VPN infrastructure to provide Hosted Private Cloud services with enhanced end-to-end security and service-level guarantees.

Public cloud services lack the SLA and QoS guarantee levels that enterprises have grown accustomed to with their VPN networks. Recent power outages associated with major public cloud service providers have impacted many popular sites and highlighted issues associated with reliance on public cloud services. As a result, hosted private cloud services are emerging as a cost-effective and robust solution that offers quality and reliability for enterprise applications. Network service providers (NSPs) are embracing cloud services to grow new revenue streams and increase customer retention.

Sunday, October 7, 2012

Innovating at the Edge in the Age of the Cloud

With the rise of Software as a Service and social media, network service providers are witnessing a game-changing shift in how consumer and business services and applications are delivered. Some service providers see the opportunity to break beyond their connection-oriented business model and embrace these new cloud-based services. In order to do so, they are looking for ways to adapt their networks to accommodate these new services.

Service Delivery on the Edge

In order to take advantage of the new business models brought about by the service transformation, network service providers need to consider a number of factors, including adopting progressive business and monetization strategies and considering subscribers’ preferences in the service definition process. For the network service provider, the most critical change is to leverage the underlying network architecture to support the new service offerings. As a result, the traditional architecture of service provider edge networks is undergoing an aggressive period of evolution, shifting from simply a point of network connectivity to a vital point of service creation and innovation.

Subscriber Defined Services

Matching services and applications to customer expectations has always been a formidable challenge for telecom operators. However, it is now even more difficult given that the expectations of a telecom subscriber have changed drastically over the past few years. In the past, consumer and business subscribers were tethered to the network services provider as their sole source of services, but today these subscribers have connections to OTT providers and access a variety of personal and business applications. An important change is that these subscribers are not just service consumers; they are also shaping service innovations by leveraging more intelligent and programmable platforms and devices.

Wednesday, October 3, 2012

Solving the Network Services Provisioning Challenge

Centralization of applications at large scale in a consolidated data center, and the increasing size of software-as-a-service application deployments, create the need to deploy thousands of 1GbE/10GbE ports to connect to servers and storage devices in the data center. Service providers need a way to terminate application servers without having to build layers of separately managed networking devices. They also need to be able to configure services from a central location and automate the provisioning of their network devices. This is driving the need for a device-based solution that can control thousands of network ports from a single point and that can interface with service orchestration systems.

The Services Provisioning Challenge

As service providers seek to deploy new cloud and network services at high scale, managing and maintaining individual network devices adds layers of operational complexity. As layers of network devices are added to the environment, service providers have to work with multiple management systems to provision, troubleshoot, and operate the devices. These additional layers may translate into additional points of failure or dependency, reducing service performance. To meet this challenge, Juniper Networks has released the Junos Node Unifier, a Junos OS platform clustering program that reduces complexity and increases deployment flexibility by centralizing management and automating configuration of switch ports attached to MX Series 3D Universal Edge Routers acting as hubs. You can visit the landing page for the product launch here, link.

Simplifying the Network

The Junos Node Unifier enables scaling up of applications in the data center by supporting a low-cost method to connect network devices to a central hub. It reduces equipment and cabling costs and increases deployment flexibility by centralizing management and automating device configuration, while overcoming chassis limitations so that thousands of switch ports can be attached to the MX Series platform. The Junos Node Unifier solution uses the MX Series modular chassis-based systems as the hub and access platforms, including the Juniper Networks QFX3500 QFabric Node, EX4200 Ethernet Switch, and EX3300 Ethernet Switch, as the satellites. Junos Node Unifier leverages the full feature set of these devices to support multiple connection types at optimal rates, with increased interface density as well as support for L2 switching and L3/MPLS routing on the access satellites.

Tuesday, July 24, 2012

Why Network Latency Matters for Virtualized Applications

My colleague Russell Skingsley has an interesting take on the effects of latency on virtualized application performance. The purpose of virtualization is to optimize resource utilization. As Russell pointed out, it isn’t just an academic conversation. For the cloud hosting provider, it’s about revenue maximization. Network latency has a direct impact on virtualized application performance and therefore on revenue for the service provider. Your choice of network infrastructure will impact your business, but it can be difficult to see how investing in high-performance networking solutions will improve it. I will try to connect the dots.

Don't Sell it Just Once

It’s a service provider axiom that you don’t want to waste your valuable assets by selling them to a single customer, even at a premium. Take dark fiber, for instance: every SP has come to learn that selling a fiber to a single customer will never bring scalable revenues. Adding DWDM to the service mix allows you to scale up the number of customers and is an improvement over selling dark fiber, but it is limited by the number of lambdas that can fit in the spectrum on a single fiber. The scaling is linear. A similar situation exists for shared cloud computing resources.
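To make the linear-scaling point concrete, here is a minimal Python sketch. The channel counts and prices are illustrative assumptions, not figures from any real service, and the function names are hypothetical.

```python
# Illustrative only: channel counts and prices below are assumed values.

def dark_fiber_revenue(price_per_fiber: float) -> float:
    """A fiber sold whole to one customer yields a single revenue stream."""
    return price_per_fiber


def dwdm_revenue(lambdas: int, price_per_lambda: float) -> float:
    """Each wavelength (lambda) on the fiber can be sold to a different customer,
    so revenue grows in direct proportion to the number of lambdas."""
    return lambdas * price_per_lambda


if __name__ == "__main__":
    # Doubling the number of lambdas doubles the revenue, and no more than that.
    for channels in (8, 40, 80):
        print(channels, dwdm_revenue(channels, price_per_lambda=1_000.0))
```

Revenue per fiber is capped by the number of wavelengths the spectrum can hold, which is the ceiling the paragraph above describes.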

Sunday, July 22, 2012

Is There a Real Use Case for LISP, The Locator/ID Separation Protocol?

LISP is a protocol that pops back into the news just when you might have forgotten that it existed. It happened again in June when Cisco re-launched LISP for fast mobility at their annual user conference, complete with an on-stage demo and much fanfare. While the demos are impressive, the history of LISP makes me wonder what is going on behind the curtain. This isn’t the first time that Cisco has proposed LISP for a new use case. In 2011, Cisco positioned LISP as a solution for IPv6 transition and virtual machine mobility, along with VXLAN and OTV, creating a triumvirate of proprietary protocols to further pioneering use cases. But is there real value in LISP?

What is LISP?

LISP is a protocol and an addressing architecture originally discussed at the IETF in 2006 to help contain the growth of the route tables in core routers. The LISP proposal, which was submitted in 2010, is still under development as an experimental draft in the IETF, see link. As far as I have seen, there is not much consensus regarding the usefulness of LISP, and it has several open issues in the areas of security, service migration, and deployability. Because the cost and risk associated with LISP are significant, network operators have scaled their routing systems using other techniques, such as deploying routers with sufficient FIB capacity and deploying NAT.

Wednesday, June 13, 2012

Juniper’s Vision for SDN Enables Network Innovation

The importance of the network continues to grow, and innovation in the network needs to support new business models and drive social and economic change. SDN will help address challenges currently faced by the network as it evolves to support business applications. It is worthwhile to step back and determine what the big-picture problem is to be solved. SDN is about adding value and solving a problem that has not been solved. Helping network operators combine best-of-breed networking equipment with SDN control to build cost-effective networks that provide new business opportunities is key to Juniper’s strategy. I’d like to elaborate on a few key points.

Juniper’s Vision For The New Network And Software-Defined Networking Are Aligned

I’ve talked in previous blogs about Juniper’s New Network Platform Architecture. Juniper is delivering the New Network to increase the rate of innovation, streamline network operating costs through automation, and reduce overall capital expenses. Legacy networks have grown large and complex. This has stifled innovation and made networks costly to build and maintain. Both the New Network and Software-Defined Networking (SDN) are about removing complexity, treating the network itself as a platform, and shifting the emphasis from maintenance to innovation.

SDN provides an abstracted, logical view of the network with externalized software-based control and fewer control points, for better network control and simplified network operations. Juniper’s vision for SDN includes bi-directional interaction between the network and applications and a real-time feedback loop to ensure an optimal outcome for all elements and a predictable experience for users. This capability is transparent, allowing customers to augment their existing network infrastructures to be SDN-enabled.

How the QFabric System Enables a High-Performance, Scalable Big Data Infrastructure

Big Data Analytics is a trend that is changing the way businesses gather intelligence and evaluate their operations. Driven by a combination of technology innovation, maturing open source software, commodity hardware, ubiquitous social networking and pervasive mobile devices, the rise of big data has created an inflection point across all verticals, such as financial services, public sector and health care, that must be addressed in order for organizations to do business effectively and economically.

Analytics Drive Business Decisions

Big data has recently become a top area of interest for IT organizations due to the dramatic increase in the volume of data being created and due to innovations in data gathering techniques that enable the synthesis and analysis of the data to provide powerful business intelligence that can often be acted upon in real time. For example, retailers can increase operational margins by responding to customers’ buying patterns, and in the health industry, big data can enhance outcomes in diagnosis and treatment.

The big data phenomenon raises a challenging question for CIOs and CTOs: What is the big data infrastructure strategy? A unique characteristic of big data is that it does not work well in traditional Online Transaction Processing (OLTP) data stores or with structured query language (SQL) analysis tools. Big data requires a flat, horizontally scalable database, accessed with unique query tools that work in real time. As a result of this requirement, IT must invest in new technologies and architectures to utilize the power of real-time data streams.

Tuesday, June 5, 2012

Juniper’s New Network Platform Architecture

If you talked with me at Interop Las Vegas last week, you heard me speak of Juniper’s New Network Platform Architecture. The New Network Platform Architecture is an initiative that brings together Juniper’s innovations in silicon, software, and systems to deliver best-in-class network designs that enable business advantages for our customers. This is a milestone for Juniper, and it shows the commitment that we have to delivering value to our customers to help them compete in today’s marketplace.

Juniper’s Focus on the Customer

Juniper’s approach to designing network architectures is to solve today’s business and technology limitations with designs that deliver greater efficiency, increased business value, better performance, and opportunity that will last. With a focus on our customers’ business objectives, the demands of their applications, and workflow needs, our approach is to design architectures that lift legacy limitations and transform our customers’ expectations for the network. Our objective is to drive business value for our customers by simplifying architectures, operation models, and workflows. By using our domain designs, customers can optimize their network investments to increase productivity, generate revenue, and enhance the quality of the user experience.

The Changing Environment

With soaring growth in bandwidth demand, mobile consumer devices, cloud, and M2M, network needs and the pace of innovation have changed dramatically, and Juniper is enabling our customers to grow and capture the opportunity. Our differentiation is our ability to take complex architectures and legacy operating systems and simplify, modernize, and scale them for our customers. From core routing to data centers, Juniper has consistently delivered breakthrough innovations, leveraging our expertise in silicon, systems, and software to help our customers increase efficiency, drive business value, and accelerate service delivery.

Thursday, October 27, 2011

Leveraging Converged Infrastructure To Deliver Microsoft Exchange 2010

The Critical Communications Tool

While we hear a lot about new communications technologies, for most of us email is the tool that we use every day. For end users, email is still the most efficient way to communicate globally, but for the IT department email can be a difficult application to deploy and manage. Guaranteeing performance and availability for an increasingly mobile workforce while keeping costs in check is a challenge. Organizations are challenged by the time required to manage email delivery systems. Time is spent on installation and configuration of software and the supporting infrastructure. Much of an organization’s staff can be consumed by routine tasks, driving up the cost of ownership. It can be difficult to determine what infrastructure is required to scale up as the number of users increases. As email becomes more feature-rich, supporting large numbers of power users creates increasing demands on the systems. Organizations want predictable operation and support for their entire solution. They want to be sure that they are maximizing system resource utilization and eliminating server underutilization while delivering a superior user experience.

Making the Move to Exchange 2010

With Exchange Server 2010, Microsoft has made significant changes in the architecture to address the growing need of businesses to increase mailbox quotas, drive down storage and IT costs, provide a high degree of availability, meet regulatory requirements for data retention and compliance, and enhance productivity. To take advantage of the new HA, DR, and archiving functionality, IT organizations may have to look at a new platform and re-architect the Exchange environment. Changes in how storage is managed create the incentive to change the storage infrastructure. The new database availability group (DAG) functionality requires Microsoft Windows Server 2008 Enterprise Edition, requiring an upgrade to 64-bit server hardware. The Single Instance Storage (SIS) feature, which reduced redundancy by storing only a single copy of an email or attachment, has been eliminated, increasing storage requirements.

Tuesday, August 30, 2011

The VCE Vblock FastPath Desktop Virtualization Platform

Organizations face increasing costs and security concerns created by the quantity and diversity of devices accessing the network, but they must still respond to the growing needs of the business. Now organizations can rapidly deploy a more secure, cost-effective, and flexible desktop environment using the Vblock FastPath Desktop Virtualization Platform. See this video to learn how you can deploy virtual desktops faster.

The Vblock FastPath Desktop Virtualization Platform is a purpose-built solution that helps IT organizations to automate desktop and application management by enabling rapid deployment, reducing costs and improving security through centralization of the desktop environment.

Backstory: I wrote the script for this video and worked with the producer to get it completed. It was done for the launch of the FastPath solution for which I was the marketing manager.

Sunday, August 28, 2011

Solving The Desktop Virtualization Challenge With Vblock FastPath

The Desktop Challenge

The traditional workplace experience used to be working at the office on a PC. Now, the workplace could be the office, the home, or any public location, and we have more than PCs. Work tools also include tablets and smartphones. Network users could be anywhere, on any kind of device, and they'll need access to all forms of rich media, like real-time interactive video as well as voice. As a result of these changes, organizations are faced with growing security concerns around access to applications and control over corporate data.

Virtualization has been used extensively over the last decade to consolidate servers, improve resource utilization, reduce power consumption, lower costs, and streamline server management. Many of the same issues around cost, complexity, and energy efficiency impact desktop users and IT administrators. Conventional desktop computing approaches are evolving to address an increasing number of regulatory requirements such as Sarbanes-Oxley and HIPAA. We all know that data breaches are a major concern for corporations. Organizations are trying to protect data and simplify desktop management while meeting the needs of users who demand greater mobility, more devices, and increased flexibility.

As a result of these challenges with desktop management, organizations are looking for ways to deploy a more secure, cost-effective, and flexible desktop environment using Virtual Desktop Infrastructure (VDI). With VDI, organizations can centrally manage desktop images, ensuring a uniform experience for users no matter what platform they are using or where they are located. With VDI, the company-wide security posture is improved by keeping critical information in the corporate data center, not on the PC. Costs are reduced through centralized desktop management.

Tuesday, August 16, 2011

The Vblock Trusted Multi-Tenancy Model for Cloud Services

Cloud computing offers many economic and environmental advantages to service providers. The ability to deliver infrastructure services to multiple internal or external consumers is a core component of cloud computing. With shared virtual converged infrastructure and best-of-class network, compute, storage, virtualization, and security technologies from Cisco, EMC, and VMware, the Vblock platform presents new opportunities for service providers to deliver secure dedicated services to multiple tenants. In this video you will learn how Vblock Trusted Multi-Tenancy (TMT) from VCE enables service providers to address the key concerns of tenants in the multi-tenant environment: confidentiality, security, compliance, service levels, availability, data protection, and management control.

Backstory: I wrote the script for this Flash video and worked with the producer to get it created.
